Welcome to the May AI Newsletter from the GovWebworks AI Lab. The following articles touch on important topics in the industry, including:

We hope you find this information as interesting as we do. If so, feel free to share with your colleagues and encourage them to sign up for the AI Newsletter and join the conversation! Or email us at ai@govwebworks.com to talk about using AI to optimize your organization’s digital goals.

#1: Microsoft’s Immersive Reader aims to boost reading comprehension

Key takeaway: Visual cues can help users to interact with complex government services.

Reviewed by Tom Lovering

Microsoft has made its Immersive Reader tool generally available for app integration via API. Previously available in some of Microsoft’s own products like Office Suite and Edge, Immersive Reader brings a range of audiovisual techniques to help people read more efficiently. By offering a Read Aloud feature and a variety of text formatting options the toolset is aimed at improving comprehension for readers with conditions like dyslexia, dysgraphia and ADHD.

As part of Microsoft’s larger offering of Azure Cognitive Services the latest version of Immersive Reader supports pre-translation and read aloud features in over 70 languages, as well as picture representation of words to increase comprehension.

Tools like Immersive Reader can significantly help users trying to interact with complex government services by offering in-app tools to render simplified language in manageable chunks, with appropriate visual cues to aid comprehension.

Microsoft gives devs an AI-powered tool to build reading accessibility into their apps

#2: Automated language translation for documents

Key takeaway: Low barrier to entry solutions exist for automating the translation of documents into multiple languages.

Reviewed by Adam Kempler

AI-based services like Amazon Translate and Google Translate provide organizations with the ability to offer the documents in multiple languages. These services and their pipelines can be integrated into existing content management systems, applications and pipelines. For example, as documents are uploaded by staff into the CMS, a pipeline could be triggered that automatically creates translated versions in specified languages and adds them to a workflow for review by staff.

These services can also be leveraged for real-time automated and semi-automated translation of user-entered content. For example, as your editorial staff is creating content in a CMS, integration with translation services from AWS, Google, and Azure Cognitive Services can automatically translate the content on a per-field basis into multiple languages, and like the documents, this can be part of a larger workflow of review and approval before publishing.

Create a serverless pipeline to translate large documents with Amazon Translate

#3: Document processing governance

Key takeaway: Service providers are getting more sophisticated in how they provide governance for the processing of documents.

Reviewed by Adam Kempler

Governance can be a critical part of a document processing pipeline. In previous posts, I’ve talked about how tools like NLP (Natural Language Processing) can be used to pull structured data out of unstructured documents. This structured data can then be stored and used for filtered and faceted searches. However sometimes it may be critical to record where that data came from (data lineage). For example, if financial numbers were being pulled from multiple documents, and then another internal process was doing calculations on those numbers and providing them to the next step in the pipeline, governance and data lineage tools can give transparency as to where those numbers originated. This type of tracking of the source of data can be important for both legal and credibility purposes, depending on the use-case.

Other types of governance that can be introduced include automated redaction or removal of PII information such as names, email addresses, and social security numbers as content and documents are processed. The processing pipeline could even create multiple versions of a document, each accessible to different roles. For example, an original for users with appropriate permissions, and augmented/annotated/redacted versions for users with different permissions.

Intelligent governance of document processing pipelines for regulated industries

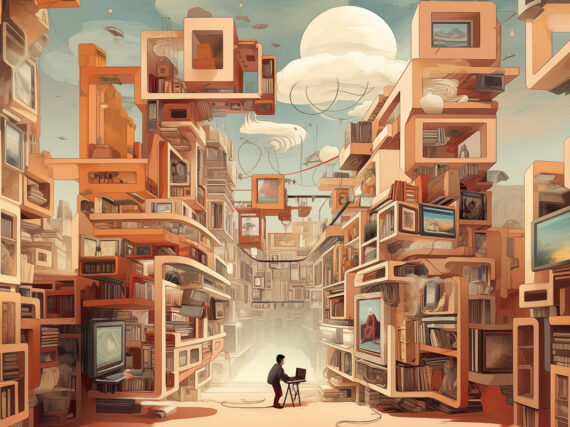

#4: Automatic captioning of images is getting a lot better

Key takeaway: New advances in automated image captioning will provide better captions and improved media discovery.

Reviewed by Adam Kempler

Automated image captioning provides a summary description of an image. This differs from image tagging in that it provides more context and describes details about what is going on in an image such as “A bunch of bicycles parked along the bridge.” Whereas tagging just provide keywords for the image such as “bicycle” and “bridge”.

Finding available datasets to train the AI has created limitations for image captioning. As a result, traditional image and caption datasets have a single image associated with a single caption. Now Google has introduced a new “Crisscrossed Captions” dataset of images and captions that relates a caption to multiple images, an image to multiple captions, and captions to captions. This allows for a much higher level of learning and provides the capability for richer discovery of related media, because now AI can more easily understand what images are related to each other based on the captions. Additionally, it can perform better at writing captions because it has more semantic understanding of the relationship to different captions.

Crisscrossed Captions: Semantic similarity for images and text

Learn more

- Sign up for the AI Newsletter for a roundup of the latest AI-related articles and news delivered to your inbox

- Find out more about using AI to optimize your organization’s digital goals